How opinion polls create the future

Imagine Hugh Grant and Seán Connery are in the same class at school. They have a maths exam in six weeks and whoever gets the higher mark wins a gold star. At the end of the first week, they have a wee class test on which Hugh scores 50 and Seán 28; Hugh spends the next couple of weeks wearing a smug, self-satisfied grin and wondering whom he’ll invite to the Hunt Ball while Seán kicks stones up and down the lane swearing under his breath. In week three, another test and it’s 44-35. Hugh’s still not too worried but Seán starts to think he might have a chance. Week five and, with just a week to go to the big day, the final class test sees Seán score 51 and Hugh 49. Suddenly Hugh starts to panic and gets all his friends round to help him cram while Seán now feels he has some crucial momentum. But who’s going to get the gold star in the end?!?

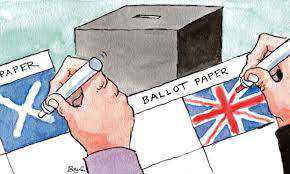

On the 18th of September 2014, the people of Scotland were asked whether Scotland should be an independent country. As all politicians say, that’s the only poll that matters. However, small numbers of people in Scotland have been asked the same question by newspapers, vox pops (or voces populorum), academic researchers, and people on social media sites for ages. Political opinion polling is big business, and political journalism is now unimaginable without it. However, some of our more passionate statistician friends have been moved to tears by the way assumptions go unacknowledged and mathematical conventions go unfollowed in the reporting of opinion polls.

Margin of error

The margin of error in an opinion poll refers to the risk that the small group of people sampled isn’t quite like the large group of people they’re supposed to represent. In a simple random sample of 1,000 people it’s about 3.1%. Incidentally, in order to improve the margin of error to about 2%, the sample would need to be 2,000 and for a 1% margin of error you’d need about 10,000, but no one is prepared to pay twice or ten times as much to conduct a poll for what they see as such a tiny return. On top of that, pollsters use designs that weight for demographics, historical patterns in polling, and other factors, all of which can alter the sampling error.
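For anyone who wants to check those figures, here is a minimal sketch of the usual 95% margin of error for a simple random sample. It assumes the worst case of a 50-50 split and ignores the weighting and design effects mentioned above; the function name `margin_of_error` is ours, purely for illustration.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """95% margin of error for a proportion p in a simple random sample of n.

    Assumptions: simple random sampling, no weighting or design effect,
    and p = 0.5 by default (the split that gives the widest margin).
    """
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # Sample sizes mentioned in the text.
    for n in (1000, 2000, 10000):
        print(f"n = {n:>6}: +/- {100 * margin_of_error(n):.1f} points")
```

Because the margin shrinks with the square root of the sample size, doubling the sample only trims it from about 3.1 to about 2.2 points, which is why the extra cost looks like poor value.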

Anyway, all we’re going to get is polls with 3.1% sampling error, but even when it’s a binary outcome, like ‘Yes’ or ‘No’, calculating whether there’s a statistically significant difference isn’t a simple matter of adding or subtracting the error term: it’s the sampling error of the difference between the scores that matters. Most people couldn’t be bothered figuring all that out, but for two shares $p_1$ and $p_2$ from the same poll of $n$ people, the variance of the difference is $[p_1 + p_2 - (p_1 - p_2)^2]/n$, according to Daniels et al. (2001). A 3.1% margin of error just means that we’re 95% certain that the actual proportion of people who would vote ‘Yes’ falls within a range of values 6.2 percentage points wide, but it tells us nothing about the ‘Nos’ and the ‘Don’t knows’. In a famous YouGov opinion poll for the Sunday Times that showed the ‘Yes’ side with a 51-49 lead, we can be fairly sure that we’re not actually sure either way, though an earlier YouGov poll showing a 22-point gap in favour of ‘No’ was a bit more clear-cut, even after the exclusion of undecideds increased the error term.
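To put rough numbers on the two YouGov examples, here is a sketch that applies the Daniels et al. expression to the gap between two shares from the same poll. The sample size of 1,000 and the 39-61 split used for the 22-point poll are our assumptions for illustration, not figures from the polls themselves, and the function names are hypothetical.

```python
import math


def lead_margin_of_error(p1, p2, n, z=1.96):
    """95% margin of error for the gap p1 - p2 between two shares
    measured in the same poll of n respondents.

    Uses the variance [p1 + p2 - (p1 - p2)^2] / n and assumes a
    simple random sample with no weighting.
    """
    variance = (p1 + p2 - (p1 - p2) ** 2) / n
    return z * math.sqrt(variance)


def lead_is_significant(p1, p2, n, z=1.96):
    """True if the observed gap is larger than its own margin of error."""
    return abs(p1 - p2) > lead_margin_of_error(p1, p2, n, z)


if __name__ == "__main__":
    n = 1000  # assumed sample size, not taken from the polls
    # (Yes, No) shares: the 51-49 poll and an assumed 39-61 split.
    for yes, no in ((0.51, 0.49), (0.39, 0.61)):
        moe = 100 * lead_margin_of_error(yes, no, n)
        gap = 100 * abs(yes - no)
        verdict = "clear" if lead_is_significant(yes, no, n) else "too close to call"
        print(f"{yes:.0%}-{no:.0%}: gap {gap:.0f}, margin {moe:.1f} -> {verdict}")
```

Under these assumptions the margin on the gap is roughly 6 points, about double the headline 3.1%, so a 2-point lead sits well inside it while a 22-point lead does not.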

What’s the point of polls then?

For non-statisticians, the question is whether opinion polls reflect or affect public opinion, and we’re supposed to think that they are only describing what’s actually happening. The assumption is that nothing will change between opinion day and polling day. It’s a rare politician, though, who thinks they can’t change something, so every new poll ends up being a catalyst for more feverish campaigning. That campaigning relies on spin, an impolite word for interpretation, which is why you’ll hear at least three different versions of the same truth that underlies a given poll. The room for manoeuvre in the margin of error is the space where debate can occur.

The rules of engagement for campaigning seem to be that we’ll all talk about the polls as if they were meaningful, even though the results of a lot of the polls you’ll see are in that grey, errory area. The interpretation of opinion polls over time often refers to people changing their minds, though there’s no basis for this claim because they don’t ask the same people. What polls actually do is raise awareness, generate opinions, and generally cause people to act as if something important has happened, even though there are no gold stars for good poll numbers. They do not predict the future; they create it.

So let’s call a poll a poll. It’s just like wee Hughie and wee Seánie’s end-of-week class tests to see how they’re getting on before the big exam. The mock exam isn’t exactly like the real one: It’s shorter, with questions drawn from a smaller pool, and some material they haven’t yet covered in class so it doesn’t really matter if it’s not all that accurate. Crucially, it gives Hugh and Seán a chance to react and how they react will determine how they get on in the exam. By the time you read this, the people of Scotland will have decided and, just as you burned your study notes straight after graduation, no one will be talking about opinion polls any more.
